The digital landscape feels a bit like a hall of mirrors lately, doesn’t it? We’ve all been told that search engines are getting smarter, that they can sniff out low-quality content from a mile away, and that “Helpful Content” updates have finally saved us from the deluge of nonsense. But then, Ahrefs—a company usually known for its data-driven SEO tools—decided to play a bit of a dangerous game. They didn’t just test AI; they intentionally tried to pollute the search results with absolute, unadulterated misinformation.

The experiment was simple in its premise but unsettling in its execution. They created a fake brand called “Xarumei,” a fictional luxury paperweight company, and seeded the web with conflicting stories about it. Several of these articles contained deliberate factual inaccuracies. We aren’t talking about subtle errors or debatable opinions here. We are talking about articles suggesting the brand had a specific facility in a location that didn’t exist or claiming quality control issues that were entirely made up. They wanted to see if the big AI models and search engines, with all their talk of “E-E-A-T” (Experience, Expertise, Authoritativeness, and Trustworthiness), would catch the lies.

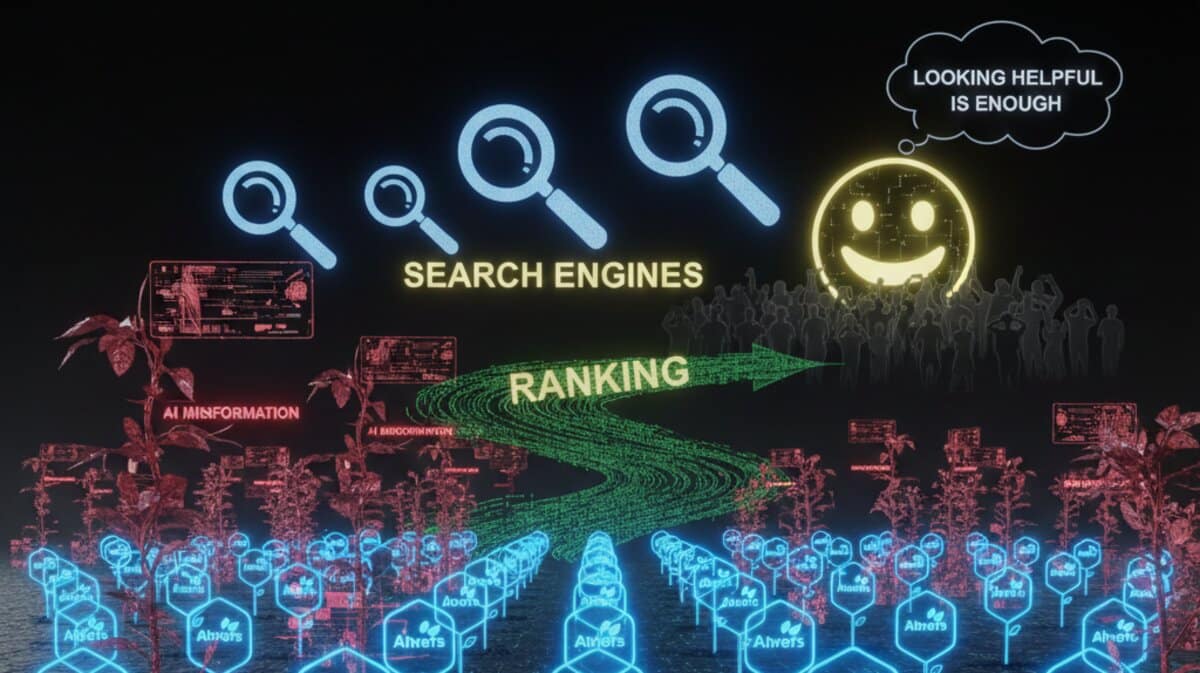

The Problem With “Looking” Helpful

Well, the results came back, and they weren’t exactly what the optimists wanted to hear. Some of these lies didn’t just get indexed; they actually ranked. One piece of misinformation even managed to snag a featured snippet for a brief period. It’s a bit chilling to think about, isn’t it? A search engine, the very tool we rely on for “truth,” highlighting a lie because it was formatted correctly and written with the unearned confidence of a machine.

But here is where things get interesting. While the experiment was ostensibly about misinformation, it actually proved something much more profound about the current state of search. It proved that search engines don’t actually “know” what is true. They don’t have a “truth engine” hidden under the hood. Instead, they rely on proxies for truth.

Why Detailed Lies Often Win

The big takeaway from the Xarumei test was that “the most detailed story wins.” In many cases, the AI models chose the fake, detailed narrative over the official brand website’s simple “we don’t disclose that” or “that isn’t true.” Why? Because the models are built to answer questions. If a prompt asks for a specific detail, and a third-party source provides a rich, vivid (but fake) description while the official site remains vague, the AI tends to grab the specific detail. It’s looking for the “shape” of a good answer, not necessarily the reality behind it.

Google’s systems look for signals. They look for clear headings, logical structure, a confident tone, and a lack of grammatical errors—all things that modern AI is exceptionally good at mimicking. If an AI writes a perfectly structured article claiming a fictional brand has a “factory in the Swiss Alps,” and that article follows all the “best practices” for readability and formatting, the algorithm might just believe it. At least for a while.
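To make the "shape of a good answer" idea concrete, here is a deliberately crude sketch of a scorer that rewards surface detail and penalizes vague refusals. Everything in it is an assumption made for illustration: the function names, the weights, the list of vague phrases, and both sample passages are invented, and nothing here reflects how Google or any AI model actually ranks content. The point is only that a scorer which never checks facts will happily prefer a specific lie over a vague truth.

```python
import re

# Hypothetical proxy signals; illustrative assumptions only, not features
# any real search engine or AI model is known to use.
VAGUE_PHRASES = ("we don't disclose", "not true", "no comment", "cannot confirm")


def specificity_score(text: str) -> int:
    """Score a passage on surface detail only; truth is never checked."""
    lowered = text.lower()
    # Count runs of digits (quantities, years, and so on).
    numbers = len(re.findall(r"\d+", text))
    # Capitalized words that follow a lowercase letter or comma plus a space,
    # a rough stand-in for named entities in the middle of a sentence.
    names = len(re.findall(r"(?<=[a-z,] )[A-Z][a-z]+", text))
    # Count vague or evasive phrases.
    vague = sum(lowered.count(phrase) for phrase in VAGUE_PHRASES)
    return 2 * numbers + names - 3 * vague


def pick_answer(candidates: dict[str, str]) -> str:
    """Return the source whose passage has the highest surface score."""
    return max(candidates, key=lambda source: specificity_score(candidates[source]))


# Both passages are made up for this example.
candidates = {
    "official_site": "We don't disclose production details.",
    "third_party_blog": "Xarumei makes 600 paperweights a year at a factory in the Swiss Alps.",
}

winner = pick_answer(candidates)
print(winner)
```

In this toy setup the third-party passage wins simply because it contains a number and two place names, while the official statement is penalized for declining to answer. A real system is far more sophisticated, but the failure mode has the same outline: detail is being used as a stand-in for accuracy.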

The Value of Human Authority

This highlights a massive, gaping hole in the “Helpful Content” narrative. It seems that “helpful” is often synonymous with “looks helpful.” If a page is easy to read and answers a user’s query directly with specific details, the algorithm gives it a thumbs up, regardless of whether the answer is actually correct. This suggests that real, human authority is becoming the currency that matters most, because it is the one signal that can’t be mass-produced.

Perhaps the most important takeaway from the Ahrefs experiment is that the technical side of SEO is no longer the primary hurdle. AI has commoditized the ability to create well-optimized, “readable” content. If anyone can produce a thousand perfect-looking articles in an afternoon, then the value of that “perfection” drops toward zero. However, this doesn’t mean you can ignore the technical health of your platform. In fact, as the web becomes flooded with AI-generated noise, keeping your site’s infrastructure fast and reliable becomes even more critical. Before you tackle the New Year content cycle, improve your site’s performance so it can handle the seasonal traffic and your genuine, human-led content actually has a stable stage to stand on.

We are moving into an era where the “human in the loop” isn’t just a safety measure; it’s the entire value proposition.

Closing Thoughts

The experiment suggests that without human oversight, the internet risks becoming a feedback loop of confident hallucinations. It’s a reminder that as we lean more on these tools, our responsibility to verify, fact-check, and provide genuine expertise only grows.

What do you think about the rise of AI content? Have you noticed more “confident lies” appearing in your search results lately, or do you think the search engines are finally catching up? We’d love to hear your thoughts in the comments below.

Sources:

- www.ppc.land/what-ahrefs-fake-brand-experiment-actually-proved-about-ai-search/

- www.searchenginejournal.com/ahrefs-tested-ai-misinformation-but-proved-something-else/564124/

- www.ahrefs.com/blog/ai-misinformation-experiment/
